Configure the Kubernetes cloud provider

This document describes how to configure the Kubernetes provider for YugabyteDB universes using YugabyteDB Anywhere. If no cloud providers are configured in YugabyteDB Anywhere yet, the main Dashboard page prompts you to configure at least one cloud provider.

Prerequisites

To run YugabyteDB universes on Kubernetes, all you need to provide in YugabyteDB Anywhere is your Kubernetes provider credentials. YugabyteDB Anywhere uses those credentials to automatically provision and de-provision the pods that run YugabyteDB.

Before you install YugabyteDB on a Kubernetes cluster, perform the following:

- Create a yugabyte-platform-universe-management service account.

- Create a kubeconfig file of the earlier-created service account to configure access to the Kubernetes cluster.

This needs to be done for each Kubernetes cluster if you are doing a multi-cluster setup.

Service account

The secret of a service account can be used to generate a kubeconfig file. This account should not be deleted once it is in use by YugabyteDB Anywhere.

Set the YBA_NAMESPACE environment variable to the namespace where your YugabyteDB Anywhere is installed, as follows:

export YBA_NAMESPACE="yb-platform"

Note that the YBA_NAMESPACE variable is used in the commands throughout this document.

Run the following kubectl command to apply the YAML file:

export YBA_NAMESPACE="yb-platform"

kubectl apply -f https://raw.githubusercontent.com/yugabyte/charts/master/rbac/yugabyte-platform-universe-management-sa.yaml -n ${YBA_NAMESPACE}

Expect the following output:

serviceaccount/yugabyte-platform-universe-management created

The next step is to grant access to this service account using ClusterRoles and Roles, as well as ClusterRoleBindings and RoleBindings, thus allowing it to manage the YugabyteDB universe's resources for you.

The namespace in the following commands needs to be replaced with the correct namespace of the previously created service account.

The tasks you can perform depend on your access level.

Global Admin can grant broad cluster-level admin access by executing the following command:

export YBA_NAMESPACE="yb-platform"

curl -s https://raw.githubusercontent.com/yugabyte/charts/master/rbac/platform-global-admin.yaml \
  | sed "s/namespace: <SA_NAMESPACE>/namespace: ${YBA_NAMESPACE}/g" \
  | kubectl apply -n ${YBA_NAMESPACE} -f -

Global Restricted can grant access to only the specific cluster roles to create and manage YugabyteDB universes across all the namespaces in a cluster using the following command:

export YBA_NAMESPACE="yb-platform"

curl -s https://raw.githubusercontent.com/yugabyte/charts/master/rbac/platform-global.yaml \
  | sed "s/namespace: <SA_NAMESPACE>/namespace: ${YBA_NAMESPACE}/g" \
  | kubectl apply -n ${YBA_NAMESPACE} -f -

This contains ClusterRoles and ClusterRoleBindings for the required set of permissions.

Namespace Admin can grant namespace-level admin access by using the following command:

export YBA_NAMESPACE="yb-platform"

curl -s https://raw.githubusercontent.com/yugabyte/charts/master/rbac/platform-namespaced-admin.yaml \
  | sed "s/namespace: <SA_NAMESPACE>/namespace: ${YBA_NAMESPACE}/g" \
  | kubectl apply -n ${YBA_NAMESPACE} -f -

If you have multiple target namespaces, then you have to apply the YAML in all of them.

Namespace Restricted can grant access to only the specific roles required to create and manage YugabyteDB universes in a particular namespace. This contains Roles and RoleBindings for the required set of permissions.

For example, if your goal is to allow YugabyteDB Anywhere to manage YugabyteDB universes in the namespaces yb-db-demo and yb-db-us-east4-a (the target namespaces), then you need to apply the YAML in both target namespaces, as follows:

export YBA_NAMESPACE="yb-platform"

curl -s https://raw.githubusercontent.com/yugabyte/charts/master/rbac/platform-namespaced.yaml \
  | sed "s/namespace: <SA_NAMESPACE>/namespace: ${YBA_NAMESPACE}/g" \
  | kubectl apply -n ${YBA_NAMESPACE} -f -
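The sed step in the commands above only rewrites the service account's namespace inside the downloaded manifest, while the -n flag decides where the resulting objects are created. The following Python sketch illustrates that split for the two example target namespaces. It rests on assumptions: the manifest fragment is a hypothetical stand-in for the real RBAC file, the helper names are invented for illustration, and it assumes that -n should name each target namespace in turn. Nothing is executed against a cluster.

```python
PLACEHOLDER = "namespace: <SA_NAMESPACE>"


def render_manifest(manifest: str, sa_namespace: str) -> str:
    """Mirror the sed step: point the manifest at the service account's namespace."""
    return manifest.replace(PLACEHOLDER, f"namespace: {sa_namespace}")


def apply_commands(target_namespaces):
    """Build one `kubectl apply` argument list per target namespace (not executed)."""
    return [["kubectl", "apply", "-n", ns, "-f", "-"] for ns in target_namespaces]


# Hypothetical fragment standing in for the downloaded RBAC manifest.
sample = """\
subjects:
- kind: ServiceAccount
  name: yugabyte-platform-universe-management
  namespace: <SA_NAMESPACE>
"""

rendered = render_manifest(sample, "yb-platform")
commands = apply_commands(["yb-db-demo", "yb-db-us-east4-a"])

print(rendered)
for argv in commands:
    print(" ".join(argv))
```

Keeping the substitution separate from the apply step makes it easier to review the rendered manifest once before it is piped to kubectl for each namespace.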

kubeconfig file

You can create a kubeconfig file for the previously created yugabyte-platform-universe-management service account as follows:

- Run the following wget command to get the Python script for generating the kubeconfig file:

  wget https://raw.githubusercontent.com/YugaByte/charts/master/stable/yugabyte/generate_kubeconfig.py

- Run the following command to generate the kubeconfig file:

  export YBA_NAMESPACE="yb-platform"

  python generate_kubeconfig.py -s yugabyte-platform-universe-management -n ${YBA_NAMESPACE}

  Expect the following output:

  Generated the kubeconfig file: /tmp/yugabyte-platform-universe-management.conf

- Use this generated kubeconfig file as the kubeconfig in the YugabyteDB Anywhere Kubernetes provider configuration.

Select the Kubernetes service

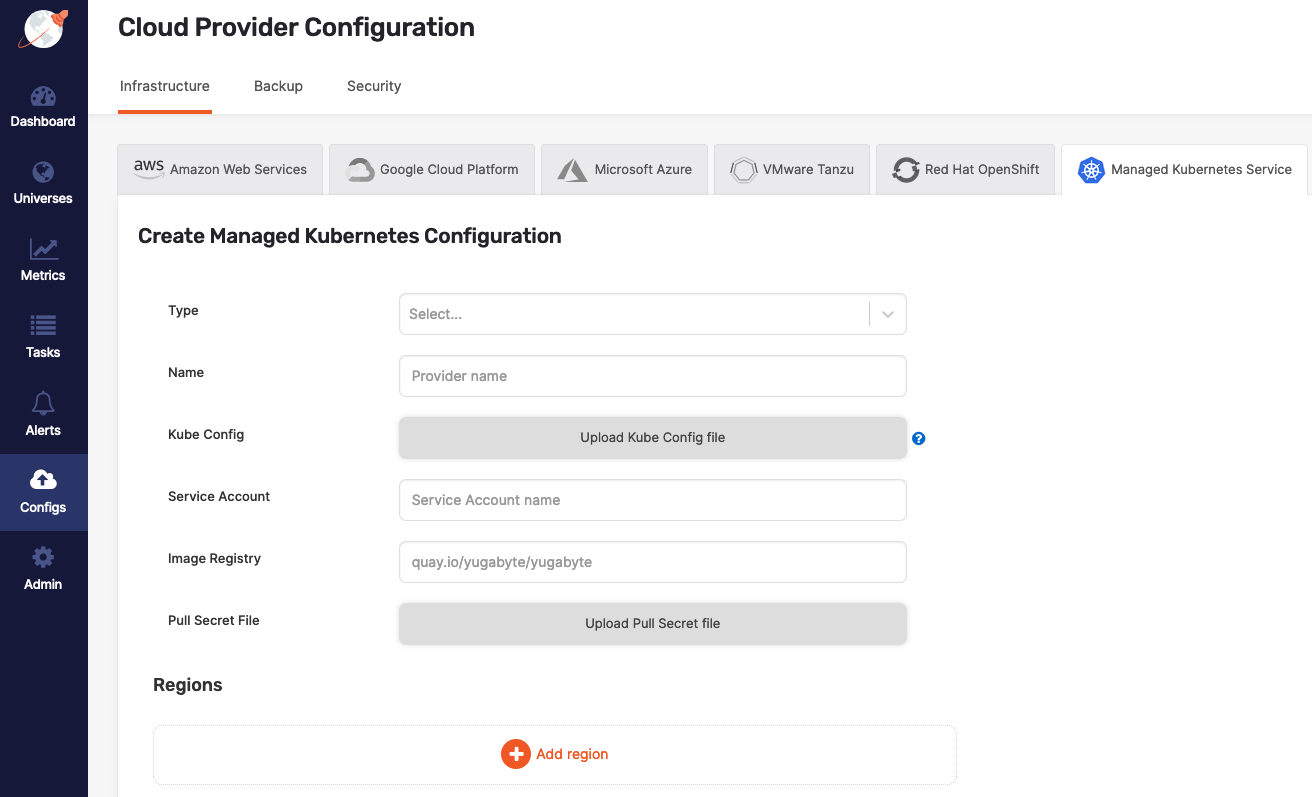

In the YugabyteDB Anywhere UI, navigate to Configs > Cloud Provider Configuration > Managed Kubernetes Service and select one of the Kubernetes service providers using the Type field, as per the following illustration:

Configure the cloud provider

Continue configuring your Kubernetes provider as follows:

- Specify a meaningful name for your configuration.

- Choose one of the following ways to specify Kube Config for an availability zone:

  - Specify at provider level in the provider form. If specified, this configuration file is used for all availability zones in all regions.

  - Specify at zone level in the region form. This is required for multi-AZ or multi-region deployments. If the zone is in a different Kubernetes cluster than YugabyteDB Anywhere, a zone-specific kubeconfig file needs to be passed.

- In the Service Account field, provide the name of the service account which has necessary access to manage the cluster (see Create cluster).

- In the Image Registry field, specify from where to pull the YugabyteDB image. Accept the default setting, unless you are hosting the registry, in which case refer to steps described in Pull and push YugabyteDB Docker images to private container registry.

- Use Pull Secret File to upload the pull secret to download the image of the Enterprise YugabyteDB that is in a private repository. Your Yugabyte sales representative should have provided this secret.

Configure region and zones

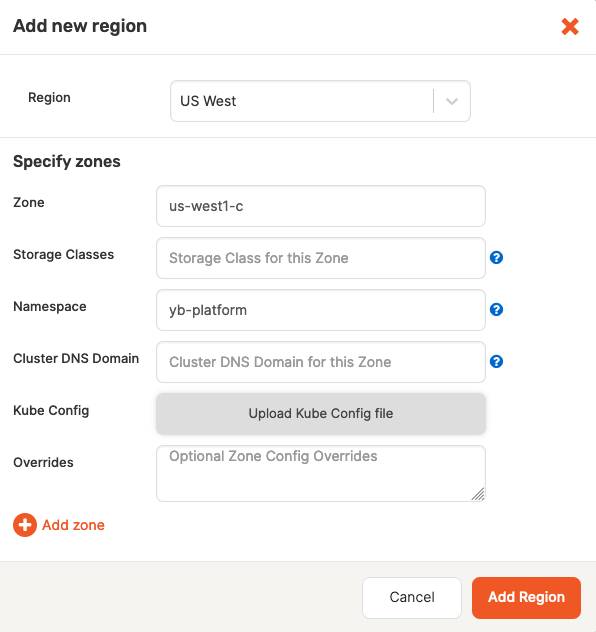

Continue configuring your Kubernetes provider by clicking Add region and completing the Add new region dialog shown in the following illustration:

- Use the Region field to select the region.

- Use the Zone field to select a zone label that matches the value of the failure domain zone label on the nodes. The topology.kubernetes.io/zone label would place the pods in that zone.

- Optionally, use the Storage Class field to enter a comma-delimited value. If you do not specify this value, it defaults to standard. You need to ensure that this storage class exists in your Kubernetes cluster and takes into account storage class considerations.

- Use the Namespace field to specify the namespace. If the provided service account has the Cluster Admin permissions, you are not required to complete this field. The service account used in the provided kubeconfig file should have access to this namespace.

- Use Kube Config to upload the configuration file. If this file is available at the provider level, you are not required to supply it.

- Complete the Overrides field using one of the provided options. If you do not specify anything, YugabyteDB Anywhere uses defaults specified inside the Helm chart. For additional information, see Open source Kubernetes.

- Click Add Zone and complete the corresponding portion of the dialog. Notice that there might be multiple zones.

- Finally, click Add Region, and then click Save to save the configuration. If successful, you will be redirected to the table view of all configurations.

Overrides

The following overrides are available:

- Overrides to add service-level annotations:

  serviceEndpoints:
    - name: "yb-master-service"
      type: "LoadBalancer"
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-internal: "0.0.0.0/0"
      app: "yb-master"
      ports:
        ui: "7000"

    - name: "yb-tserver-service"
      type: "LoadBalancer"
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-internal: "0.0.0.0/0"
      app: "yb-tserver"
      ports:
        ycql-port: "9042"
        yedis-port: "6379"
        ysql-port: "5433"

- Overrides to disable LoadBalancer:

  enableLoadBalancer: False

- Overrides to change the cluster domain name:

  domainName: my.cluster

  YugabyteDB servers and other components communicate with each other using the Kubernetes Fully Qualified Domain Names (FQDN). The default domain is cluster.local.

- Overrides to add annotations at StatefulSet level:

  networkAnnotation:
    annotation1: 'foo'
    annotation2: 'bar'

- Overrides to add custom resource allocation for YB-Master and YB-TServer pods:

  resource:
    master:
      requests:
        cpu: 2
        memory: 2Gi
      limits:
        cpu: 2
        memory: 2Gi
    tserver:
      requests:
        cpu: 2
        memory: 4Gi
      limits:
        cpu: 2
        memory: 4Gi

  This overrides instance types selected in the YugabyteDB Anywhere universe creation flow.

- Overrides to enable Istio compatibility:

  istioCompatibility:
    enabled: true

  This is required when Istio is used with Kubernetes.

- Overrides to publish node IP as the server broadcast address.

  By default, YB-Master and YB-TServer pod fully-qualified domain names (FQDN) are used in the cluster as the server broadcast address. To publish the IPs of the nodes on which YB-TServer pods are deployed, add the following YAML to each zone override configuration:

  tserver:
    extraEnv:
    - name: NODE_IP
      valueFrom:
        fieldRef:
          fieldPath: status.hostIP
    serverBroadcastAddress: "$(NODE_IP)"
    affinity:
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - "yb-tserver"
          topologyKey: kubernetes.io/hostname

  # Required to ensure that the Kubernetes FQDNs are used for
  # internal communication between the nodes and node-to-node
  # TLS certificates are validated correctly
  gflags:
    master:
      use_private_ip: cloud
    tserver:
      use_private_ip: cloud

  serviceEndpoints:
    - name: "yb-master-ui"
      type: LoadBalancer
      app: "yb-master"
      ports:
        http-ui: "7000"

    - name: "yb-tserver-service"
      type: NodePort
      externalTrafficPolicy: "Local"
      app: "yb-tserver"
      ports:
        tcp-yql-port: "9042"
        tcp-yedis-port: "6379"
        tcp-ysql-port: "5433"

- Overrides to run YugabyteDB as a non-root user:

  podSecurityContext:
    enabled: true
    ## Set to false to stop the non-root user validation
    runAsNonRoot: true
    fsGroup: 10001
    runAsUser: 10001
    runAsGroup: 10001

  Note that you cannot change users during the Helm upgrades.

- Overrides to add tolerations in YB-Master and YB-TServer pods:

  ## Consider the node has the following taint:
  ## kubectl taint nodes node1 dedicated=experimental:NoSchedule

  master:
    tolerations:
    - key: dedicated
      operator: Equal
      value: experimental
      effect: NoSchedule

  tserver:
    tolerations: []

  Tolerations work in combination with taints: Taints are applied on nodes and Tolerations are applied to pods. Taints and tolerations ensure that pods do not schedule onto inappropriate nodes. You need to set nodeSelector to schedule YugabyteDB pods onto specific nodes, and then use taints and tolerations to prevent other pods from getting scheduled on the dedicated nodes, if required. For more information, see toleration and Toleration API.

- Overrides to use nodeSelector to schedule YB-Master and YB-TServer pods on dedicated nodes:

  ## To schedule a pod on a node that has a topology.kubernetes.io/zone=asia-south2-a label
  nodeSelector:
    topology.kubernetes.io/zone: asia-south2-a

  For more information, see nodeSelector and Kubernetes: Node Selector.

- Overrides to add affinity in YB-Master and YB-TServer pods:

  ## To prevent scheduling of multiple master pods on single kubernetes node
  master:
    affinity:
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - "yb-master"
          topologyKey: kubernetes.io/hostname

  tserver:
    affinity: {}

  affinity allows the Kubernetes scheduler to place a pod on a set of nodes or a pod relative to the placement of other pods. You can use nodeAffinity rules to control pod placements on a set of nodes. In contrast, podAffinity or podAntiAffinity rules provide the ability to control pod placements relative to other pods. For more information, see Affinity API.

- Overrides to add annotations to YB-Master and YB-TServer pods:

  master:
    podAnnotations:
      application: "yugabytedb"

  tserver:
    podAnnotations: {}

  The Kubernetes annotations can attach arbitrary metadata to objects. For more information, see Annotations.

- Overrides to add labels to YB-Master and YB-TServer pods:

  master:
    podLabels:
      environment: production
      app: yugabytedb
      prometheus.io/scrape: true

  tserver:
    podLabels: {}

  The Kubernetes labels are key-value pairs attached to objects. The labels are used to specify identifying attributes of objects that are meaningful and relevant to you. For more information, see Labels.

- Preflight check overrides, such as DNS address resolution, disk IO, available port, ulimit:

  ## Default values
  preflight:
    ## Set to true to skip disk IO check, DNS address resolution, port bind checks
    skipAll: false

    ## Set to true to skip port bind checks
    skipBind: false

    ## Set to true to skip ulimit verification
    ## SkipAll has higher priority
    skipUlimit: false

  For more information, see Helm chart: Prerequisites.

- Overrides to use a secret for LDAP authentication. Refer to Create secrets for Kubernetes.
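To make the interplay between taints and the tolerations override more concrete, the following Python sketch models the matching rule in simplified form: a toleration matches a taint when the keys and effects agree and the operator is either Exists or Equal with equal values. This is an illustrative model of the documented Kubernetes behavior, not the scheduler's implementation. The function names are hypothetical, and only the NoSchedule and NoExecute effects are treated as blocking.

```python
def tolerates(toleration, taint):
    """Return True if one toleration matches one taint (simplified model)."""
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False  # an empty effect tolerates every effect
    key = toleration.get("key", "")
    if key and key != taint["key"]:
        return False  # an empty key tolerates every key
    operator = toleration.get("operator", "Equal")
    if operator == "Exists":
        return True
    if operator == "Equal":
        return toleration.get("value", "") == taint.get("value", "")
    return False


def can_schedule(pod_tolerations, node_taints):
    """A pod may be placed on a node only if every blocking taint is tolerated."""
    blocking = [t for t in node_taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in pod_tolerations) for taint in blocking)


# Taint from the tolerations override example: dedicated=experimental:NoSchedule
node_taints = [{"key": "dedicated", "value": "experimental", "effect": "NoSchedule"}]

# Mirrors the master and tserver sections of the tolerations override.
master_tolerations = [
    {"key": "dedicated", "operator": "Equal", "value": "experimental", "effect": "NoSchedule"}
]
tserver_tolerations = []

print(can_schedule(master_tolerations, node_taints))   # True
print(can_schedule(tserver_tolerations, node_taints))  # False
```

With the example override, YB-Master pods could be placed on the tainted node while YB-TServer pods could not, which is why the same toleration is usually needed in both sections when both pod types should run on dedicated nodes.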

Configure Kubernetes multi-cluster environment

If you plan to create multi-region YugabyteDB universes, you can set up Multi-Cluster Services (MCS) across your Kubernetes clusters. This section covers implementation-specific details for setting up MCS on various cloud providers and service mesh tools.

YugabyteDB Anywhere support for MCS is in beta

The Kubernetes MCS API is currently in alpha, though there are various implementations of MCS which are considered to be stable. To know more, see API versioning in the Kubernetes documentation.

MCS support in YugabyteDB Anywhere is currently in Beta. Keep in mind the following caveats:

- Universe metrics may not display correct metrics for all the pods.

- xCluster replication needs an additional manual step to work on OpenShift MCS.

Prepare Kubernetes clusters for GKE MCS

GKE MCS allows clusters to be combined as a fleet on Google Cloud. These fleet clusters can export services, which enables you to do cross-cluster communication. For more information, see Multi-cluster Services in the Google Cloud documentation.

To enable MCS on your GKE clusters, see Configuring multi-cluster Services. Note down the unique membership name of each cluster in the fleet, as it will be used during the cloud provider setup in YugabyteDB Anywhere.

Prepare OpenShift clusters for MCS

Red Hat OpenShift Container Platform uses the Advanced Cluster Management for Kubernetes (RHACM) and its Submariner add-on to enable MCS. At a high level, this involves the following steps:

- Create a management cluster and install RHACM on it. For details, see Installing Red Hat Advanced Cluster Management for Kubernetes in the Red Hat documentation.

- Provision the OpenShift clusters which will be connected together.

  Ensure that the CIDRs mentioned in the cluster configuration file at networking.clusterNetwork, networking.serviceNetwork, and networking.machineNetwork are non-overlapping across the multiple clusters. You can find more details about these options in provider-specific sections under the OpenShift Container Platform installation overview (look for sections named "Installing a cluster on [provider name] with customizations").

- Import the clusters into RHACM as a cluster set, and install the Submariner add-on on them. For more information, see Configuring Submariner.

- Note down the cluster names from the cluster set, as these will be used during the cloud provider setup in YugabyteDB Anywhere.
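The non-overlap requirement for the cluster CIDRs can be checked before provisioning. The following Python sketch uses the standard ipaddress module to report any pair of networks from different clusters that overlap. The cluster names and CIDR values are hypothetical examples, and the helper name is invented for illustration; substitute the values from your own cluster configuration files.

```python
from ipaddress import ip_network
from itertools import combinations


def find_overlaps(cidrs_by_cluster):
    """Return (cluster_a, cidr_a, cluster_b, cidr_b) for each overlapping pair
    of networks that belong to different clusters."""
    entries = [
        (cluster, ip_network(cidr))
        for cluster, cidrs in cidrs_by_cluster.items()
        for cidr in cidrs
    ]
    overlaps = []
    for (cluster_a, net_a), (cluster_b, net_b) in combinations(entries, 2):
        if cluster_a == cluster_b or net_a.version != net_b.version:
            continue  # only compare same-family networks across clusters
        if net_a.overlaps(net_b):
            overlaps.append((cluster_a, str(net_a), cluster_b, str(net_b)))
    return overlaps


# Hypothetical values for clusterNetwork, serviceNetwork, and machineNetwork.
clusters = {
    "cluster-a": ["10.128.0.0/14", "172.30.0.0/16", "10.0.0.0/16"],
    "cluster-b": ["10.132.0.0/14", "172.31.0.0/16", "10.1.0.0/16"],
}

print(find_overlaps(clusters))  # [] means no cross-cluster overlap
```

An empty result means the ranges are safe to use together; any returned tuple names the two clusters and the conflicting ranges.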

Prepare Kubernetes clusters for Istio multicluster

An Istio service mesh can span multiple clusters, which allows you to configure MCS. It supports different topologies and network configurations. To install an Istio mesh across multiple Kubernetes clusters, see Install Multicluster in the Istio documentation.

The Istio configuration for each cluster should have the following options:

apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  meshConfig:
    defaultConfig:
      proxyMetadata:
        ISTIO_META_DNS_CAPTURE: "true"
        ISTIO_META_DNS_AUTO_ALLOCATE: "true"
  # rest of the configuration…

Refer to Multi-Region YugabyteDB Deployments on Kubernetes with Istio for a step-by-step guide and an explanation of the options being used.

Configure the cloud provider for MCS

Once you have the cluster set up, follow the instructions in Configure the Kubernetes cloud provider, and refer to this section for region and zone configuration required for multi-region universes.

Configure region and zone for GKE MCS

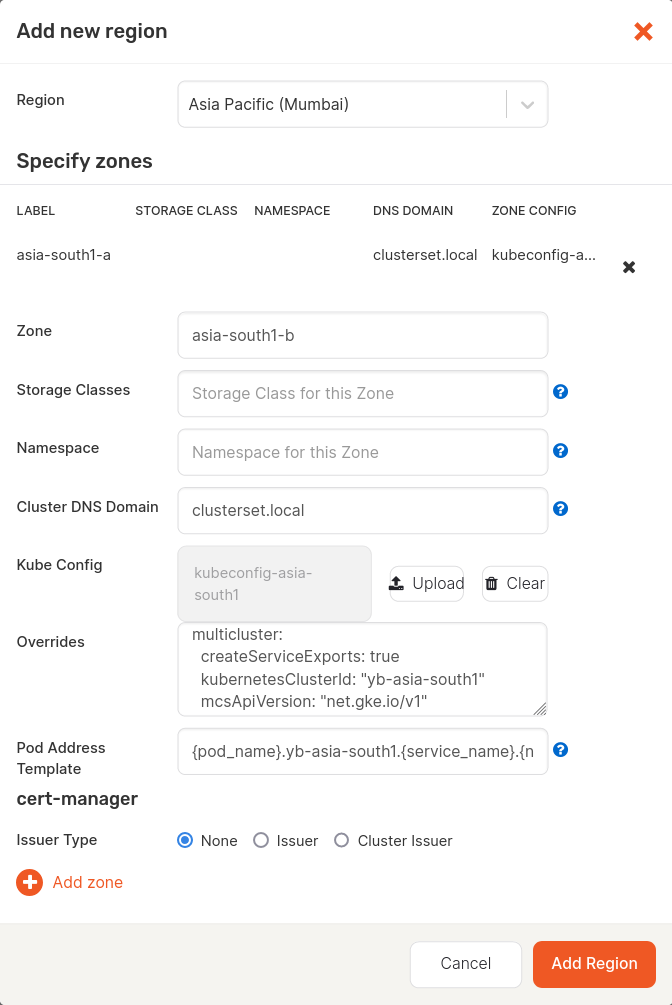

Follow the steps in Configure region and zones and set values for all the zones from your Kubernetes clusters connected via GKE MCS as follows:

- Specify fields such as Region, Zone, and so on as you would normally.

- Set the Cluster DNS Domain to

clusterset.local. - Upload the correct Kube Config of the cluster.

- Set the Pod Address Template to

{pod_name}.<cluster membership name>.{service_name}.{namespace}.svc.{cluster_domain}, where the<cluster membership name>is the membership name of the Kubernetes cluster set during the fleet setup. - Set the Overrides as follows:

multicluster: createServiceExports: true kubernetesClusterId: "<cluster membership name>" mcsApiVersion: "net.gke.io/v1"

For example, if your cluster membership name is yb-asia-south1, then the Add new region screen would look as follows:

Configure region and zones for OpenShift MCS

Follow the instructions in Configure the OpenShift cloud provider and Create a provider in YugabyteDB Anywhere. For all the zones from your OpenShift clusters connected via MCS (Submariner), add a region as follows:

- Specify fields such as Region, Zone, and so on as you would normally.

- Set the Cluster DNS Domain to clusterset.local.

- Upload the correct Kube Config of the cluster.

- Set the Pod Address Template to {pod_name}.<cluster name>.{service_name}.{namespace}.svc.{cluster_domain}, where the <cluster name> is the name of the OpenShift cluster set during the cluster set creation.

- Set the Overrides as follows:

  multicluster:
    createServiceExports: true
    kubernetesClusterId: "<cluster name>"

For example, if your cluster name is yb-asia-south1, then the values will be as follows:

- Pod Address Template

  {pod_name}.yb-asia-south1.{service_name}.{namespace}.svc.{cluster_domain}

- Overrides

  multicluster:
    createServiceExports: true
    kubernetesClusterId: "yb-asia-south1"
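To show what a Pod Address Template resolves to, the following Python sketch fills in the brace placeholders of the example template for one pod. It assumes that YugabyteDB Anywhere substitutes the brace placeholders per pod, while the cluster name is typed into the template by hand. The pod, service, and namespace values are hypothetical and chosen only for illustration.

```python
# Example template from this section, with the cluster name already filled in.
TEMPLATE = "{pod_name}.yb-asia-south1.{service_name}.{namespace}.svc.{cluster_domain}"


def pod_address(template, pod_name, service_name, namespace,
                cluster_domain="clusterset.local"):
    """Expand the brace placeholders of a Pod Address Template for one pod."""
    return template.format(
        pod_name=pod_name,
        service_name=service_name,
        namespace=namespace,
        cluster_domain=cluster_domain,
    )


# Hypothetical pod, service, and namespace names.
address = pod_address(TEMPLATE, "yb-tserver-0", "yb-tservers", "yb-db-demo")
print(address)
# yb-tserver-0.yb-asia-south1.yb-tservers.yb-db-demo.svc.clusterset.local
```

Expanding the template by hand for one pod is a quick way to spot a misspelled cluster name before saving the provider configuration.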

Configure region and zones for Istio

Follow the steps in Configure region and zones and set values for all the zones from your Kubernetes clusters connected via Istio as follows:

- Specify fields such as Region, Zone, and so on as you would normally.

- Upload the correct Kube Config of the cluster.

- Set the Pod Address Template to {pod_name}.{namespace}.svc.{cluster_domain}.

- Set the Overrides as follows:

  istioCompatibility:
    enabled: true
  multicluster:
    createServicePerPod: true